To start out, I want to assert that use cases are engineered efforts to detect issues, while hunts are ad hoc efforts to detect issues. You need both. In this blog, we’ll talk about the right approach to building a use case, and an often-neglected portion of that build effort: developing and deploying testing alongside the development and deployment of the detection capability. Ideally, the development of use cases would be a requirement for all new IT systems deployments (on-prem, cloud, etc.). It’s an optimistic position to hold, but any company with a mature DevSecOps approach should consider the inclusion of use cases as part of that effort, even if they’re not a required output from the initial systems development. One output of hunting is the discovery of good candidates for use cases. We’ll cover hunting in more detail in a future post (if you can’t wait, take a look at Crowley’s “Hunting by Numbers” YouTube video).

In the SOC-Class model, the steps for building a use case are:

- Identify the scenario and business relevance

- Decompose the scenario into elements

- Identify artifacts of data relevant to the elements

- Propose (and test) candidates of detection and differentiation within the data elements

- Select enrichment opportunities within the data

- Implement the use case

- Monitor and assess the performance of the implemented use case based on the detection and differentiation initially proposed

- Review and revise the components (scenario elements, data artifacts, detection candidates, enrichment) and expire them when there is no longer business relevance or a relevant threat
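
The steps above can be tracked per use case with a small record. The following is a minimal sketch, not part of the SOC-Class material: the `Stage` and `UseCase` names, the fields, and the `advance` method are all hypothetical, chosen only to show the ordered lifecycle.

```python
from dataclasses import dataclass, field
from enum import Enum


class Stage(Enum):
    """The eight steps listed above, in order (names are illustrative)."""
    IDENTIFY_SCENARIO = 1
    DECOMPOSE = 2
    IDENTIFY_ARTIFACTS = 3
    PROPOSE_AND_TEST = 4
    SELECT_ENRICHMENT = 5
    IMPLEMENT = 6
    MONITOR = 7
    REVIEW_OR_EXPIRE = 8


@dataclass
class UseCase:
    name: str
    business_relevance: str
    stage: Stage = Stage.IDENTIFY_SCENARIO
    notes: dict = field(default_factory=dict)

    def advance(self, note: str) -> Stage:
        """Record what was done at the current stage, then move to the next one."""
        self.notes[self.stage.name] = note
        if self.stage is not Stage.REVIEW_OR_EXPIRE:
            self.stage = Stage(self.stage.value + 1)
        return self.stage
```

Keeping a note per stage gives the later review step something concrete to revisit.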

Many organizations lack a programmatic definition of use case development. Many are doing it in some form, but don’t have a clearly articulated strategy and tactical implementation of this fundamentally important activity of security operations. If you want more of the details related to how this fits into a larger program of security operations for a SOC, you should look at Crowley’s SOC-Class.

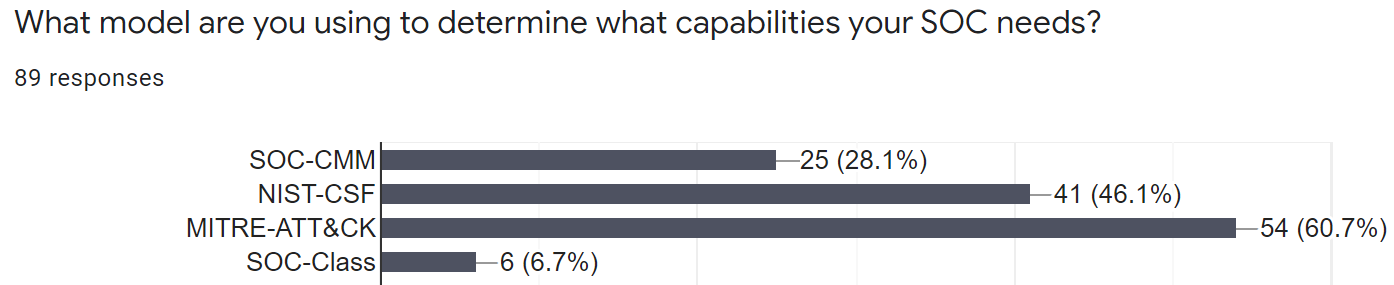

The ATT&CK framework is commonly referenced as a maturity assessment capability (see figure 1 for its prevalence in the forthcoming SOC-Survey.com results), so SOCs are tasked with assessing their confidence in detecting a given tactic or technique expressed by an adversary. Without tests in place to simulate those tactics and techniques, we can’t (honestly) assert confidence.

The mandate to verify our use cases in ongoing operations is part of our maturity self-assessment, as well as a solid approach to performance measurements. If the adversary behaviors are an unknown input into our environment (a reasonable assumption: we don’t know what the adversary is doing and we’re trying to find that activity), ongoing testing that simulates the adversary behavior is an effective way to assert ongoing confidence that our use cases are functioning as designed.
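
One way to picture this kind of ongoing testing is a harness that pairs each use case with a harmless simulation of the behavior it should detect. This is a sketch under stated assumptions: the function names, the registry shape, and the PNG stand-in are hypothetical and do not describe any specific product.

```python
from typing import Callable, Dict, Tuple

# Each entry pairs a simulator (a benign stand-in for adversary activity)
# with the detector expected to flag it. All names here are hypothetical.
Check = Tuple[Callable[[], bytes], Callable[[bytes], bool]]

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def run_detection_tests(checks: Dict[str, Check]) -> Dict[str, bool]:
    """Feed every simulation to its detector; True means the detection fired."""
    results = {}
    for use_case, (simulate, detect) in checks.items():
        results[use_case] = bool(detect(simulate()))
    return results


def simulate_png_lure() -> bytes:
    # Harmless test artifact: a PNG signature followed by filler bytes.
    return PNG_SIGNATURE + b"\x00" * 16


def detect_png(data: bytes) -> bool:
    return data.startswith(PNG_SIGNATURE)


if __name__ == "__main__":
    print(run_detection_tests({"image-lure": (simulate_png_lure, detect_png)}))
```

A result of `False` for a use case that previously passed is the signal that the detection, or the data feeding it, has stopped working as designed.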

## Use Case Development Example

The following discussion applies the SOC-Class model to develop a use case that detects coercive malicious documents delivered to an organization’s users through phishing campaigns. Given InQuest’s capabilities and its mantra of “Prevent, Detect, Hunt”, it feels natural to tackle the problem with our favorite pattern-matching tool, YARA.

### Identify the scenario and business relevance

As we have already acknowledged, phishing is the predominant access vector for threat actors to carry out their attacks; it is irresponsible to think that it is possible to “Squish the Phish” or get that “click rate” down to zero. In the spirit of the colloquial security proverb, “Prevention is ideal, but detection is a must!”, InQuest has been following a variety of different techniques used in malicious documents.

Threat actors consistently try to convince the unsuspecting user to “enable macros” or “enable content” to progress down the attack chain. One method to detect these coercive lures is the implementation of Optical Character Recognition within the Deep File Inspection stack.

Another innovative technique is to anchor and pivot on Adobe Extensible Metadata Platform (XMP) identifiers (IDs) shared within these coercive lures. Unfortunately, there are a few scenarios where cleverly obfuscated embedded logic may still prove troublesome to detect and does not align with the previous detection techniques for graphical lures. Consider the following image, which coerces the recipient to “Enable Content” in a foreign language.

After considering the fact that these graphical lures are often utilized across campaigns and incorporated into a variety of different obfuscation/delivery frameworks, it may prove fruitful to develop a methodology for the use case of detection of maldocs using the images they contain with the obvious

### Decompose the scenario into elements

Following the SOC-Class model, the next step for developing a use case is to decompose the scenario into elements. The derived elements are the malicious documents themselves, the security tool, and the prospective targets of the phishing campaign.

### Identify artifacts of data relevant to the elements

The artifacts relevant to the malicious document elements that we plan on anchoring our detection logic on are the images embedded within the documents. We have been busy collating these graphical lures within our Malware Lures Gallery.

While some of these images and their respective parent files are well detected by OCR and XMP anchors and are known to the AV community, some are not. Given the likelihood that these lures recur alongside emerging TTPs, a redundant or novel detection method could prevent the next incident or data breach.
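
For zip-based Office formats, the embedded images can be pulled out with the standard library alone. The sketch below is an illustration, not InQuest’s Deep File Inspection: the function name is hypothetical, and it assumes the document is an OOXML container, which keeps images under folders such as `word/media/` and `xl/media/`. Legacy OLE documents need a different parser.

```python
import zipfile
from typing import IO, Dict, Union


def list_embedded_media(doc: Union[str, IO[bytes]]) -> Dict[str, bytes]:
    """Return {archive path: raw bytes} for every file stored under a media/ folder."""
    media = {}
    with zipfile.ZipFile(doc) as archive:
        for name in archive.namelist():
            # Skip directory entries; keep anything below a media/ folder.
            if "/media/" in name and not name.endswith("/"):
                media[name] = archive.read(name)
    return media
```

Each returned byte string is a candidate artifact to anchor detection logic on.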

### Propose (and test) candidates of detection and differentiation within the data elements

To properly develop, test, and scale this technique, the detection rule will start with a small subset of data and the respective strings being used. For this venture, we will use the following maldocs to generate the use case.

| Indicator Type | SHA-256 Hash | Notes/Reports |
| --- | --- | --- |
| Document Hash | ccf6d989bd33ecd81ee39f8a89ec72e5f27936a277d2ff41f4afe2d89060c770 | InQuest Labs, VirusTotal |
| Document Hash | 63c8b6288a09b1ac43867bee20e5147e1251d589458f0a2f5686f66a47e0d259 | InQuest Labs, VirusTotal |
| Document Hash | d541874dd0e9d045f893a30c64cac85b5c9ecfa249d287d0378bc82199e35036 | InQuest Labs, VirusTotal |
| Document Hash | eb940285e68042df9c82c929ba87c3bd4c93e4c7969b34ab4f09f20f90a892a8 | InQuest Labs, VirusTotal |
| Document Hash | 40e5e65bc8514eb8ac9c1b87b297c4c010e6934338cddac16eef5a8d3a756cf8 | InQuest Labs, VirusTotal |

After performing Deep File Inspection on the above files, the specific images of concern are identified.

    $ find . -name '*' -exec file {} \; | grep -o -P '^.+: \w+ image'
    ./d541874dd0e9d045f893a30c64cac85b5c9ecfa249d287d0378bc82199e35036-pp/carving-001.png: PNG image
    ./40e5e65bc8514eb8ac9c1b87b297c4c010e6934338cddac16eef5a8d3a756cf8-pp/carving-001.png: PNG image
    ./eb940285e68042df9c82c929ba87c3bd4c93e4c7969b34ab4f09f20f90a892a8-pp/tge-zip-1-1/xl/media/image1.jpg.png: PNG image
    ./63c8b6288a09b1ac43867bee20e5147e1251d589458f0a2f5686f66a47e0d259-pp/tge-zip-1-1/word/media/image1.png: PNG image
    ./ccf6d989bd33ecd81ee39f8a89ec72e5f27936a277d2ff41f4afe2d89060c770-pp/ccf6d989bd33ecd81ee39f8a89ec72e5f27936a277d2ff41f4afe2d89060c770.stream-5.olefileio.jpg.png: PNG image
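
The same triage can be scripted without the `file` utility by checking each file for the 8-byte PNG signature. This is a rough sketch, and the function name is hypothetical. It identifies files by content rather than extension, which matters for names like `image1.jpg.png` in the listing above.

```python
import os
from typing import List

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first 8 bytes of every PNG file


def find_png_files(root: str) -> List[str]:
    """Walk root and return sorted paths whose content starts with the PNG signature."""
    hits = []
    for folder, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(folder, name)
            try:
                with open(path, "rb") as handle:
                    if handle.read(len(PNG_SIGNATURE)) == PNG_SIGNATURE:
                        hits.append(path)
            except OSError:
                continue  # unreadable file: skip it rather than abort the walk
    return sorted(hits)
```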

Here are the derived graphical lures for your viewing pleasure :)

Select enrichment opportunities within the data

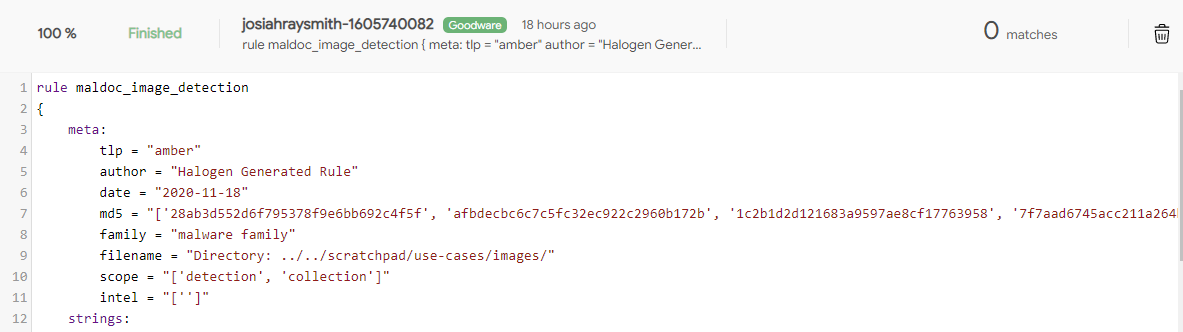

There are a variety of techniques for anchoring images as strings within a YARA rule. This derivative uses a nifty tool recently brought to our attention, Halogen. The resulting rule is output from the tool:

    rule halo_generated_a9b32fad32b4afb8cb3330c189fd7c87 : maldoc image
    {
        meta:
            tlp = "amber"
            author = "Halogen Generated Rule"
            date = "2020-11-18"
            md5 = "['28ab3d552d6f795378f9e6bb692c4f5f', 'afbdecbc6c7c5fc32ec922c2960b172b', '1c2b1d2d121683a9597ae8cf17763958', '7f7aad6745acc211a264bbc1350aed89', 'a9b32fad32b4afb8cb3330c189fd7c87']"
            family = "malware family"
            filename = "Directory: ../../scratchpad/use-cases/images/"
            scope = "['detection', 'collection']"
            intel = "['']"
        strings:
            $png_img_value_0 = {89504e470d0a1a0a0000000d49484452000000cd0000003a08020000009c494a9f000000017352474200aece1ce9000000097048597300000ec400000ec401952b0e1b0000201249444154785eed9d075c95d51bc77d}
            $png_img_value_1 = {89504e470d0a1a0a0000000d49484452000000180000001808020000006f15aaaf000000017352474200aece1ce9000000097048597300000ec400000ec401952b0e1b000001d249444154384f63fcffffffb7efbfe6}
            $png_img_value_2 = {89504e470d0a1a0a0000000d49484452000000180000001808020000006f15aaaf000000017352474200aece1ce9000000097048597300000ec400000ec401952b0e1b000002f549444154384f9d545d481451149e3b}
            $png_img_value_3 = {89504e470d0a1a0a0000000d49484452000005550000027d0802000000baa0053d00000006624b474400ff00ff00ffa0bda793000000097048597300000ec300000ec301c76fa8640000800049444154780104c13d8e}
            $png_img_value_4 = {89504e470d0a1a0a0000000d49484452000002df0000015b080200000082a175c0000000017352474200aece1ce90000ffca49444154785eecfd77971cc796de0b676596aff6dde8863704080284a13d7666ce68ace6}
            $png_img_value_5 = {89504e470d0a1a0a0000000d4948445200000353000000fc0806000000921afe3a000000017352474200aece1ce90000000467414d410000b18f0bfc6105000000097048597300000ec200000ec20115284a80000094}
            $png_img_value_6 = {89504e470d0a1a0a0000000d494844520000026e0000012c0802000000f5b2a8be00000006624b474400ff00ff00ffa0bda793000080004944415478daecfdf7775bc7963f0abe7f647e98796fde74b8d7b698903398}
        condition:
            any of them
    }
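
To see what the hex strings in such a rule cover, it helps to read them as the start of a PNG file: the 8-byte signature, then the IHDR chunk carrying width and height, then whatever follows. The sketch below is not Halogen’s implementation. The function names and the default `length` of 64 bytes are assumptions made for illustration.

```python
import struct
from typing import Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes) -> Tuple[int, int]:
    """Read (width, height) from the IHDR chunk that follows the PNG signature."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG header")
    # Width and height are two big-endian 32-bit integers at offsets 16 and 20.
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def yara_hex_string(data: bytes, length: int = 64) -> str:
    """Format the first `length` bytes as a YARA hex string literal."""
    return "{" + data[:length].hex() + "}"


if __name__ == "__main__":
    # Leading bytes of $png_img_value_0 from the rule above.
    prefix = bytes.fromhex("89504e470d0a1a0a0000000d49484452000000cd0000003a")
    print(png_dimensions(prefix))  # (205, 58)
    print(yara_hex_string(prefix, 8))
```

Because the signature and chunk layout are identical across PNG files, the distinguishing power of each string comes from the bytes after the header, so a prefix that is too short will match unrelated images.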

### Implement the use case

Before fully implementing the use case, there are a few mechanisms for gauging the rule’s efficacy before it is released into production. Using the VirusTotal Hunting feature, the signature’s fidelity can be benchmarked against real-world traffic being submitted to VirusTotal via their Livehunt operations. Additionally, a Retrohunt can be performed against the Goodware file set, a corpus of non-malicious files. The Goodware Retrohunt finished without any matches, which supports an assumption of higher fidelity by proactively ruling out some false positives.

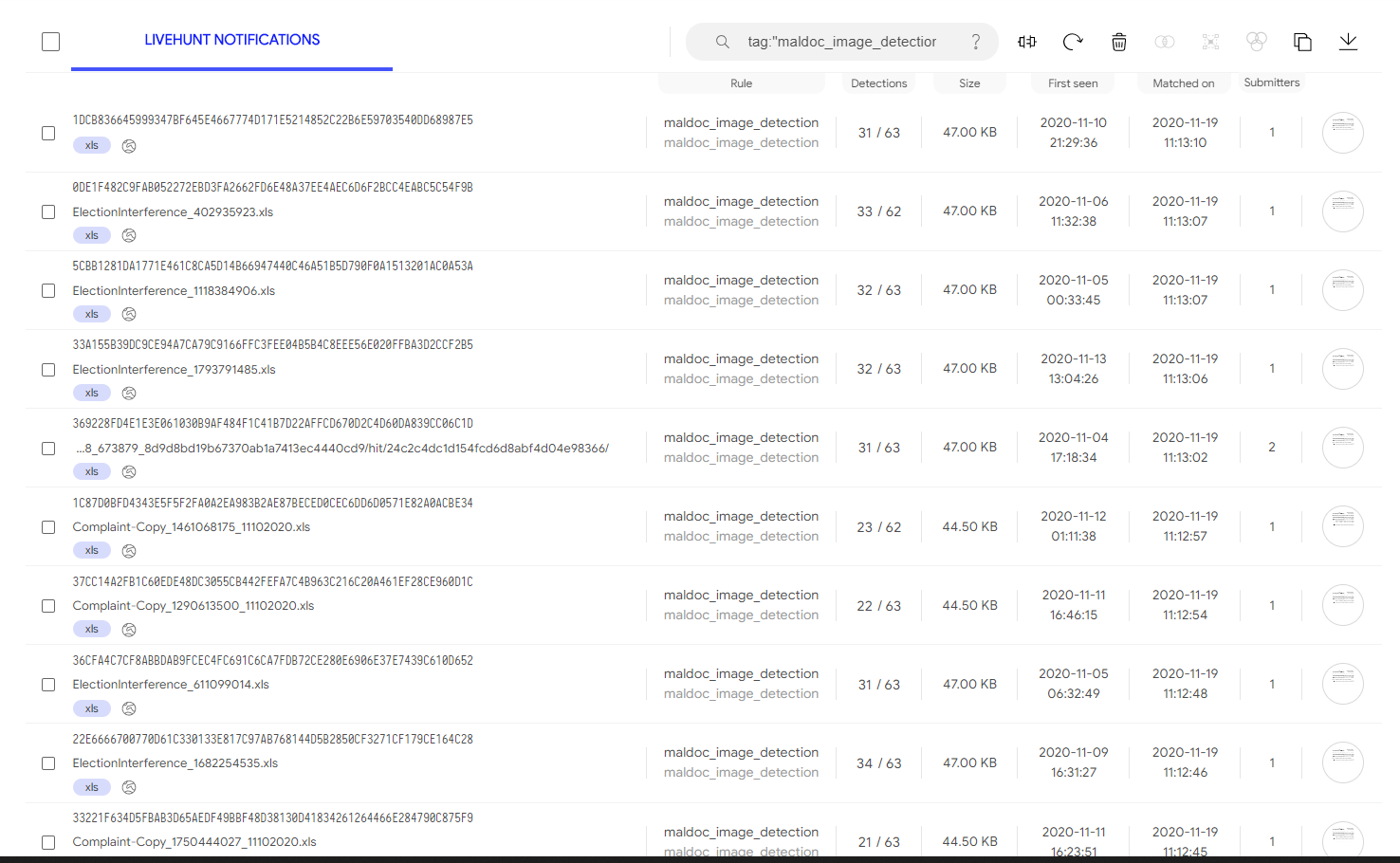
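
The idea behind a goodware check can be reproduced locally on a small scale. The following sketch is a stand-in using plain byte matching. It is not the VirusTotal Retrohunt API, and the function names and the in-memory corpus shape are hypothetical.

```python
from typing import Dict, Iterable, List


def matching_files(patterns: Iterable[bytes], corpus: Dict[str, bytes]) -> List[str]:
    """Return the sorted names of corpus files that contain any of the byte patterns."""
    wanted = list(patterns)
    return sorted(name for name, data in corpus.items()
                  if any(pattern in data for pattern in wanted))


def false_positive_rate(patterns: Iterable[bytes], goodware: Dict[str, bytes]) -> float:
    """Fraction of known-good files that the patterns would flag."""
    if not goodware:
        return 0.0
    return len(matching_files(patterns, goodware)) / len(goodware)
```

A rate of zero on a local corpus is only as meaningful as the corpus is representative, which is why a large shared goodware set is the stronger benchmark.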

### Monitor and assess the performance of the implemented use case based on the detection

After the detection capability has been released into the monitoring environment, the SOC-Class use case development framework suggests continuous monitoring and performance assessment of the given solution. Two potential methods of monitoring the performance of the developed detection method are discussed here. The first is to leave the rule set within the VirusTotal Livehunt operation. As the image depicts, there is effective detection against malicious documents well known by the AV community.

Another approach to monitoring the performance of a signature is to track its hit count through various dashboards. Measuring performance here is most effective against customer traffic through MSSP visibility. A further approach is to continue inspecting high-volume data streams through services like MXmaildata.
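
The counting behind such a dashboard can be as simple as grouping hits by rule and day. This is a minimal sketch. The event shape, a `(day, rule name)` pair, and both function names are assumptions made for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def hits_per_day(events: Iterable[Tuple[str, str]]) -> Dict[str, Counter]:
    """Group (day, rule_name) events into {rule_name: Counter({day: hits})}."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for day, rule in events:
        table[rule][day] += 1
    return dict(table)


def silent_rules(table: Dict[str, Counter], expected_rules: Iterable[str]) -> List[str]:
    """List deployed rules with no recorded hits, which may indicate a broken feed or rule."""
    return sorted(rule for rule in expected_rules if not table.get(rule))
```

A rule that stays silent is not necessarily healthy, which is where the simulated tests discussed earlier in this post come in.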

### Review and revise the components

Following the SOC-Class model, the next step is to review and revise the use case components. For this specific process, let’s contrive a scenario in which there were various false positives or benign true positives against a subset of executable files. This oversight is easily corrected by changing the condition logic in our prospective rule. While it would be perfectly acceptable to add the MZ magic string and use it with a NOT condition, we will use this handy UInt() Trigger Generator for speed and performance. The resulting condition:

    condition:
        /* trigger = 'MZ' */
        any of them and not (uint16be(0x0) == 0x4d5a)
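
For readers less familiar with YARA’s integer functions, the check reads the first two bytes of the file as a big-endian 16-bit number and compares it with `0x4d5a`, the ASCII bytes `MZ`. A Python rendering of the same test, with hypothetical helper names:

```python
import struct
from typing import Optional


def uint16be(data: bytes, offset: int = 0) -> Optional[int]:
    """Big-endian unsigned 16-bit integer at offset, or None when out of range."""
    if offset < 0 or offset + 2 > len(data):
        return None
    return struct.unpack_from(">H", data, offset)[0]


def is_mz_executable(data: bytes) -> bool:
    """True when the data starts with the 'MZ' magic (0x4d 0x5a)."""
    return uint16be(data, 0) == 0x4D5A
```

Comparing a fixed-offset integer avoids searching the whole file for a string, which is the speed benefit the trigger approach is after.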

### Expire it when there is no longer business relevance or a relevant threat

The SOC-Class model’s last step is to retire the use case when it is no longer relevant. For this specific scenario, if the detection with the image anchoring becomes non-actionable, or graphical coercive lures stop being used within phishing campaigns, the threat may no longer be relevant to the organization. Best practice is to have a time-based review process for the efficacy and usefulness of these detection capabilities. While the review interval is arbitrary and specific to your organization, it should be employed and documented within the SOC’s organizational policy.
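
A time-based review can be enforced with a simple overdue check. The sketch below is illustrative only: the record fields, the function name, and the 180-day default are hypothetical, and the right interval is whatever your policy documents.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List


@dataclass
class ReviewRecord:
    name: str
    last_reviewed: date
    interval_days: int = 180  # hypothetical default; set this per policy


def overdue_for_review(records: Iterable[ReviewRecord], today: date) -> List[str]:
    """Return the names of use cases whose review interval has elapsed."""
    return [record.name for record in records
            if today - record.last_reviewed > timedelta(days=record.interval_days)]
```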

## About the SOC-Class/Guest Author

Christopher Crowley has 20 years of experience managing and securing networks, beginning with his first job as an Ultrix and VMS systems administrator at 15 years old. Today, Crowley is a Senior Instructor at the SANS Institute and the course author for SOC-Class.com. He works with various organizations across industries providing cybersecurity technical analysis, developing and publishing research, sharing expert security insights at conferences, and chairing security operations events.

The SOC-Class is a niche course on cybersecurity operations, training CISOs, SOC Managers, and technical leads to build and excel in Cybersecurity Operations Centers (SOCs/CSOCs). This use case development methodology is one of the approaches discussed in the course and is intended to provide a framework for the mature and repeatable construction of engineered detections.
