For all the benefits of our increasingly digitally connected world, there are of course downsides. As the barrier between the kinetic and the digital grows more permeable, the potential damage of cyberattacks becomes increasingly severe. While in the past malicious attacks were limited to harming one’s bank account, some malware today aims to affect critical industries and infrastructure, including our supply chain and healthcare facilities. One recent example to consider is the attempt by the nation-state-sponsored “Sandworm” team to cause an electrical blackout in Ukraine. Protecting against the ever-growing number of potential avenues of attack becomes less and less tenable as technology (and in turn its vulnerabilities) grows exponentially.

This growth is further compounded by a long-standing shortage of skilled cybersecurity researchers, made worse as the pandemic pushed more of society’s infrastructure online. This “talent gap” has led many industries to look for technological assistance, hoping that proper analysis tools can help close the gap by covering the weaknesses of less-trained malware analysts.

One of our mantras at InQuest is that “there is no silver bullet,” and our platform is architected with this in mind. There are some great technologies that we both build on and integrate with and, where there are gaps, we engineer solutions. In a nutshell, we multiplex several technologies in tandem. Similarly, our open research portal labs.inquest.net empowers analysts to draw conclusions about a given sample through multiple lenses, making it easier for researchers to sort, parse, and spot potential threats. Among the “lenses” to choose from you can find multi-AV results, heuristic indicators, extracted IOC reputation, and machine learning.

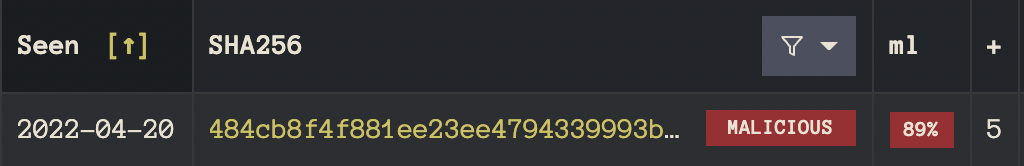

For example, say one was interested in finding particularly evasive samples for the purpose of deploying a timely YARA rule. Such samples by their nature are going to be difficult to catch; finding one of them will require cycling through several lenses to see which ones it shows up on. Consider the following sample, for instance:

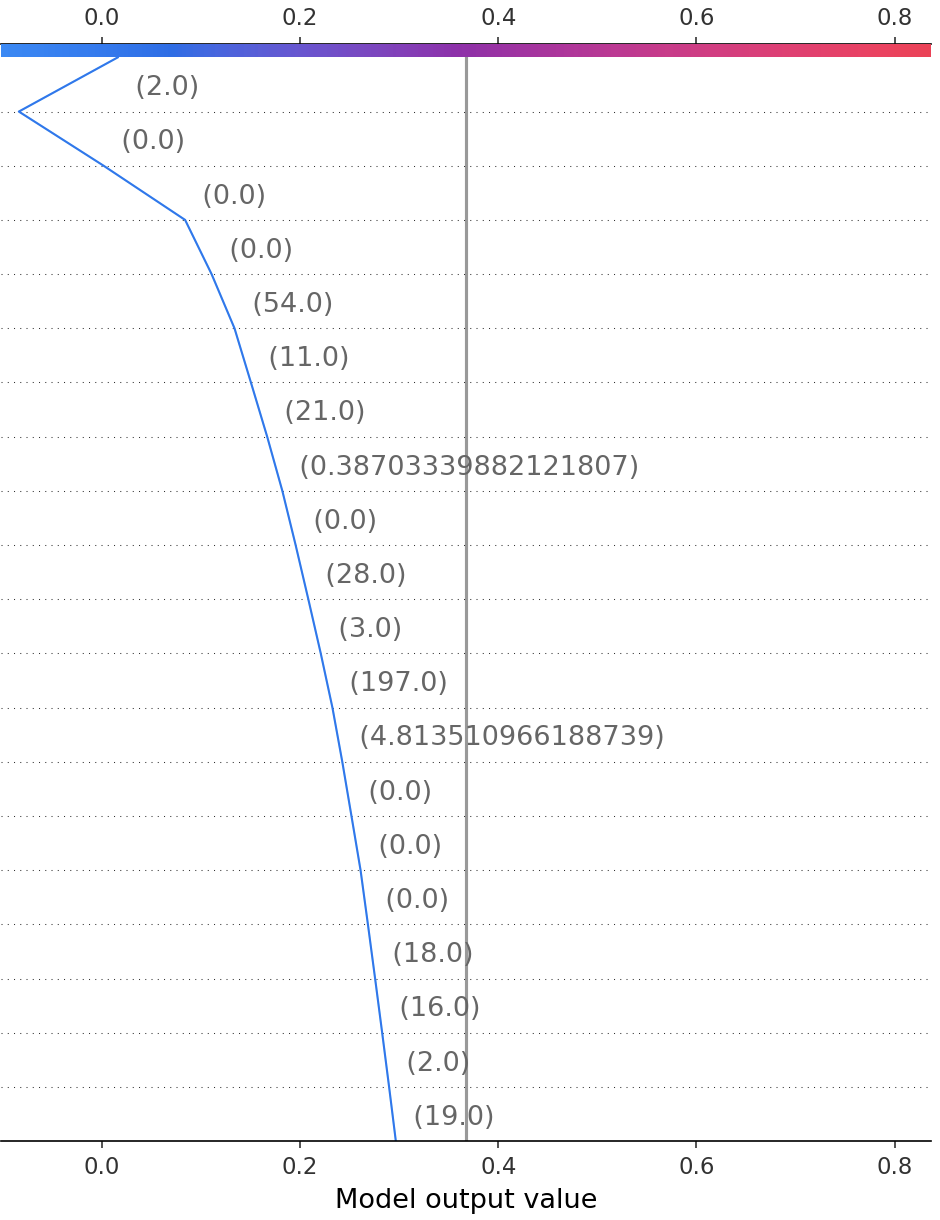

It has a multi-AV score of 5 out of 60, seemingly benign to most vendors but suspicious enough to catch an eye. By switching our perspective to that of our Random Forest (RF) machine learning model, we see a malicious label at 89% confidence. This gives us further confidence that the sample we are about to spend precious human time on is indeed worth dissection. Behind the scenes, a SHAP graph is leveraged to explain why the ML model considered this sample to be dangerous. The suspicious features provide a human analyst with a map of which areas to examine. This portion of our technology stack is proprietary and not exposed through InQuest Labs. However, to further illustrate the value here, consider the following sample:

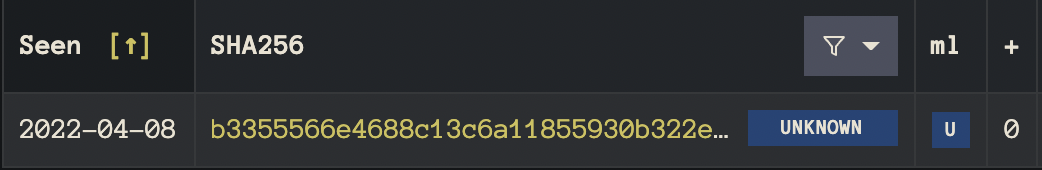

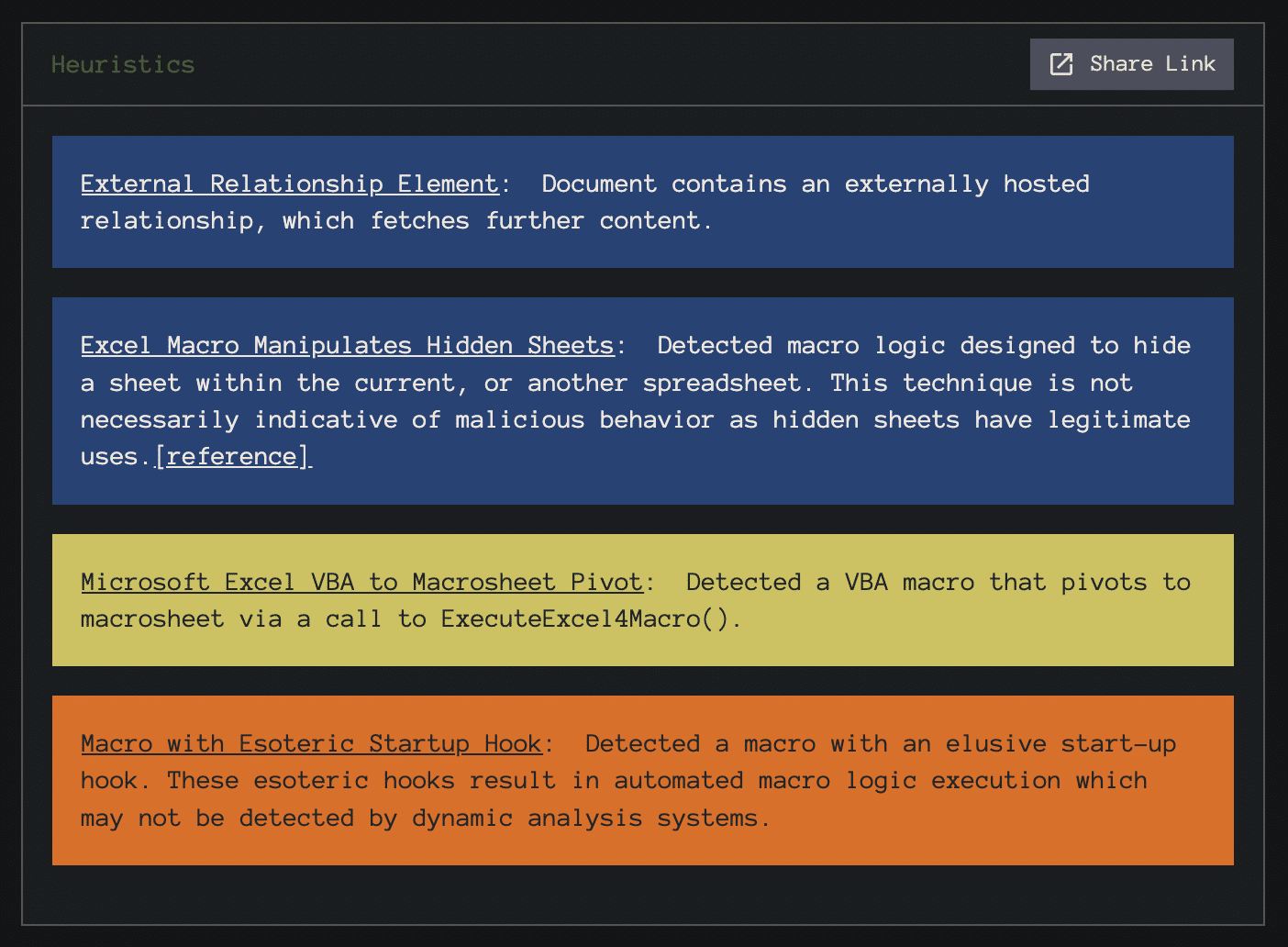

No hits on multi-AV and the ML classifier believes the sample to be benign, but some suspicious heuristics fire on the sample and catch our eye:

Let’s take a look at the human-readable explanation we derive from the SHAP graph programmatically:

All in all, this file looks safe to me. There are some strings in the macro that immediately got an eyebrow raise out of me. The number of days since this document was last modified falls into a common range. Document metadata looks normal. The usage of comments in this macro follows typical benign patterns. Highly confident that this file is benign.

The consensus between multi-AV and ML gives us confidence that this sample is indeed benign and not worth further dissection.
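As a rough illustration of how per-feature SHAP contributions might be turned into a narrative like the one above, here is a minimal sketch. The actual implementation is proprietary and not shown in this post, so the feature names, the phrasing, and the `explain` function are all hypothetical. The contributions are assumed to be precomputed, with positive values pushing toward a malicious label.

```python
from typing import Dict

# Hypothetical feature names mapped to reader-friendly labels.
FEATURE_LABELS: Dict[str, str] = {
    "macro_strings": "Macro strings",
    "days_since_modified": "Days since last modification",
    "metadata": "Document metadata",
    "macro_comments": "Macro comment usage",
}


def explain(contributions: Dict[str, float],
            malicious_probability: float,
            top_n: int = 3) -> str:
    """Summarize the strongest contributions as short sentences.

    contributions: feature name -> signed contribution (assumed SHAP-style;
                   positive pushes toward malicious, negative toward benign).
    malicious_probability: model output in [0, 1].
    """
    # Rank features by the magnitude of their contribution.
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]),
                    reverse=True)[:top_n]

    sentences = []
    for feature, value in ranked:
        label = FEATURE_LABELS.get(feature, feature)
        direction = "malicious" if value > 0 else "benign"
        sentences.append(f"{label}: leans {direction} ({value:+.2f}).")

    # Report the verdict with the confidence of the winning class.
    verdict = "malicious" if malicious_probability >= 0.5 else "benign"
    confidence = max(malicious_probability, 1.0 - malicious_probability)
    sentences.append(f"Overall: {verdict} ({confidence:.0%} confident).")
    return " ".join(sentences)


if __name__ == "__main__":
    sample = {
        "macro_strings": 0.12,
        "days_since_modified": -0.30,
        "metadata": -0.25,
        "macro_comments": -0.20,
    }
    print(explain(sample, malicious_probability=0.08, top_n=4))
```

Ranking by absolute contribution keeps the one suspicious signal (the macro strings) visible alongside the benign ones, which mirrors how the explanation above mentions a raised eyebrow while still concluding that the file is benign.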

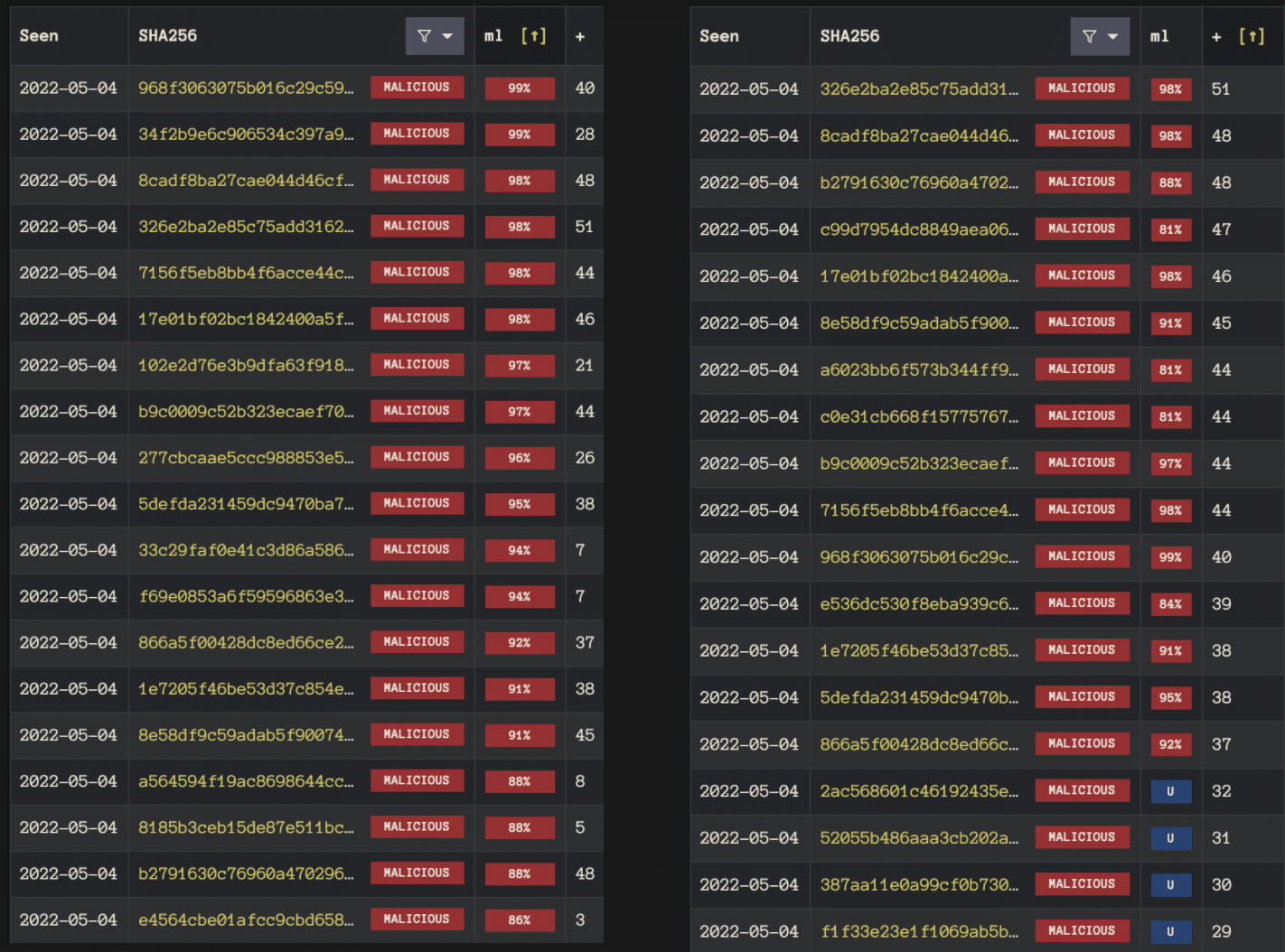

For a final real-world example, consider the value of leveraging the signal from one subsystem as the “squelch” for another. InQuest Threat Intel Analysts have authored some pretty incredible YARA rules for anchoring on new emerging threats. As great as these rules are, they tend to be noisy in real-world environments. How can we have the best of both worlds? We pair our hunt rules with explicit ML confidence levels to separate the signal from the noise. A confidence level of 80% is required for standalone ML alerting, but perhaps confidence as low as 5% is sufficient when paired with one of these bleeding-edge hunt rules.
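The squelch idea can be sketched in a few lines. This is a minimal illustration under stated assumptions rather than the platform's actual logic: the 80% and 5% thresholds come from the example above, while the `evaluate` function, the `Verdict` type, and the rule name in the usage are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

# Thresholds taken from the example figures in the text (illustrative only).
STANDALONE_ML_THRESHOLD = 0.80  # ML alerts on its own at or above this
PAIRED_ML_THRESHOLD = 0.05      # enough when a hunt rule also fires


@dataclass
class Verdict:
    alert: bool
    reason: str


def evaluate(ml_confidence: float, hunt_rule_hits: Sequence[str]) -> Verdict:
    """Combine ML confidence with hunt-rule hits to decide whether to alert."""
    if not 0.0 <= ml_confidence <= 1.0:
        raise ValueError("ml_confidence must be between 0 and 1")

    # Strong ML signal: alert regardless of hunt rules.
    if ml_confidence >= STANDALONE_ML_THRESHOLD:
        return Verdict(True, "ML confidence meets the standalone threshold")

    # Weak ML signal corroborated by a hunt rule: alert.
    if hunt_rule_hits and ml_confidence >= PAIRED_ML_THRESHOLD:
        rules = ", ".join(hunt_rule_hits)
        return Verdict(True, f"hunt rule(s) [{rules}] corroborated by ML")

    # Hunt rule fired, but ML confidence is too low: squelch the noise.
    if hunt_rule_hits:
        return Verdict(False, "hunt rule hit squelched by low ML confidence")

    return Verdict(False, "no alerting signal")


if __name__ == "__main__":
    # Hypothetical rule name, for illustration only.
    print(evaluate(0.10, ["Hunt_Example_Rule"]))
    print(evaluate(0.10, []))
```

Keeping the two thresholds as separate named constants makes the trade-off explicit: a noisy rule never alerts alone, and a modest ML score never alerts without corroboration.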

The InQuest platform provides a virtual analyst atop these described “lenses” and more. We can deploy as a SaaS solution that enhances your Microsoft Office 365 (O365) or Google Workspace (GSuite) deployment, or as an on-premises high-speed network sensor. We integrate with SIEMs, multi-AV systems such as VirusTotal and OPSWAT, and dynamic analysis solutions, such as our partner Joe Security. Our threat scoring engine will intelligently push data to and read results from these integrations dynamically, producing a high-speed and high-confidence malicious/benign label without human intervention. If you’re curious to hear more, we welcome you to reach out.
